Before the AI Flood

The first neuroscience study of ChatGPT use reveals a pattern of cognitive suppression, exposing an urgent need for guardrails in how we design and use these tools.

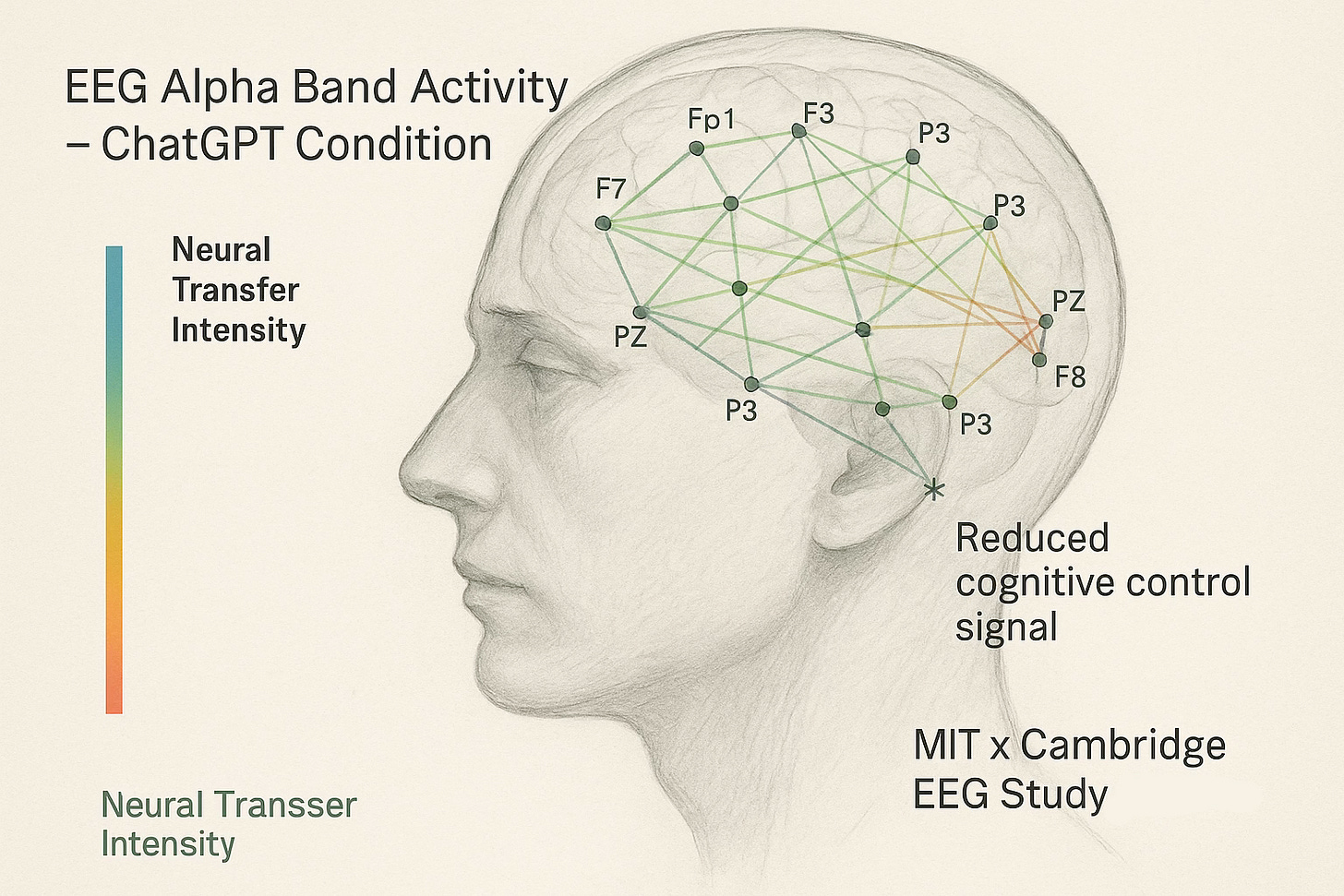

MIT-led researchers just released the first neuroscience study of ChatGPT use. The study involved 46 students writing essays with and without ChatGPT while researchers tracked EEG data and self-reported engagement. Some commentators are overstating its findings; others are brushing them off entirely. The results don't predict long-term impairment, but they do raise legitimate design concerns. EEG scans showed a sharp drop in beta-band activity while participants wrote with AI. Mental effort flagged, memory engagement thinned, and cognitive control frayed. The authors call this "cognitive debt": a measurable drop in mental effort and engagement that persisted into the next 10-minute writing task. Even after the AI was taken away, participants were less cognitively active when writing on their own.

It's not long-term impairment, more like a limp that fades quickly, but it still signals something real: the cost of convenience often shows up not in the task you outsource but in the one that comes after, when your cognitive muscles don't fire the same way.

The study isn't framed as a warning, but arriving now, on the eve of AI-native devices hitting the market in 2026, it lands like one.

That silence, the absence of any alarm, is the moment agency quietly slips into automation.

This echoes past tech debates and may be the early outline of something we're only beginning to understand. When social media first arrived, it promised to connect us, empower us, and democratize expression. For a while, it did. Then the optimism faded as anxiety surged, attention fragmented, and identity formation faltered under algorithmic pressure. We also watched disinformation spread, elections destabilize, and populations get nudged into paranoia and rage. These were features, not glitches, of the systems we had entrusted with our public discourse. Now the same architects are entering the AI race with the same playbook: optimize for engagement first and ethics later, if at all. That's the pattern that should stop us cold.

The Cognitive Stakes Are Higher This Time

This shift is even more intimate. It’s not just our behavior at risk—it’s our cognition: how we think, remember, struggle, and make meaning. 2026 won’t just be the year AI-native devices go mainstream. It will mark the beginning of a generation raised not beside technology, but inside it. Devices that don’t just respond—they script, nudge, and override, often before we know what we were reaching for. Tools that might assist us, or gradually assume authorship. And we’re still not ready.

No exposure guidelines. No developmental scaffolds. No standards at all. And we're handing this power to the same social platforms, now second-tier AI players, that built their empires by prioritizing engagement over civic stability. Many have documented records of amplifying disinformation and eroding trust. Their CEOs sat in the front row at the President's inauguration, and one platform owner wasn't watching from the audience: he is the President. That's not a metaphor. It's a milestone in the collapse of the boundary between state power and algorithmic influence.

Has any of this earned public trust? The federal government may face renewed pressure to regulate AI’s reach, though past efforts with social media came late and remain fragmented.

Why This Moment Matters

This isn't a call to ban AI. It's a call to name what's being lost. When automation embeds itself in cognition, we lose track of what's missing, not because the capacity is gone, but because the struggle that made it visible has been removed. The result? Thinking without authorship. Remembering without effort. Identity assembled from suggestion, not reflection. And a generation that may never learn to think without a prompt.

We need to draw a line between AI as a muse and AI as a mask.

If we don’t, shallow use will become the norm, and the norm will shape how the next generation learns to think.

This isn’t abstract. The kids using these tools in 2026 will be the first generation raised with AI as a mirror, not just a machine.

There’s no handbook for parents, no training for teachers, no policies for voters—unless we create them.

Some companies are at least making a gesture toward restraint. Anthropic is designing constitutional models with alignment principles built in. OpenAI is exploring memory and reflection layers to support intentional use. Apple remains committed to a privacy-first, on-device design. Microsoft is integrating ethical frameworks into its enterprise platforms. These are promising signals. But we've seen this performance before: ethics staged as theater just long enough to earn trust. Then the dials turn. Compliance bends. And harm is reframed as progress.

Building the Manual Before the Defaults Lock In

We need a research-based "operating manual" before AI-native minds are shaped without friction, without reflection, and without the muscle memory of making meaning for ourselves.

That means:

Developmental guidance based on age and cognitive readiness. Children aged 7–12 need structured reflection. Teens need authorship scaffolds. Adults need tools that deepen awareness, not erase effort.

A taxonomy of use that distinguishes reflection from replacement, emulation from delegation, and studies their impact on memory, motivation, and identity.

Research investment into long-term cognitive outcomes, including support for groups like MATS (ML Alignment & Theory Scholars).

Ethical UX defaults that add friction where it matters: pause prompts, authorship nudges, memory cues. Interfaces that protect cognition, not bypass it.

The signals are flashing. The tools are already in the room. What’s missing is public pressure, institutional memory, and shared frameworks for safe use.

Let’s not wait for the next Haidt or Twenge to tally the damage in hindsight.

Let’s not let another generation pay the price for someone else’s quarterly gain.

Let’s start building the manual now—not out of fear, but because history rarely offers a second chance. If we get this right, maybe our kids will laugh at how scared we were—because we taught them to use the machine and still know where they end.

Before the defaults are locked in.

Before the flood.

Suggested Reading

Related Research and Frameworks:

Your Brain on ChatGPT — MIT, Cambridge, Wellesley, MassArt

MATS (ML Alignment & Theory Scholars): Technical alignment training and research infrastructure